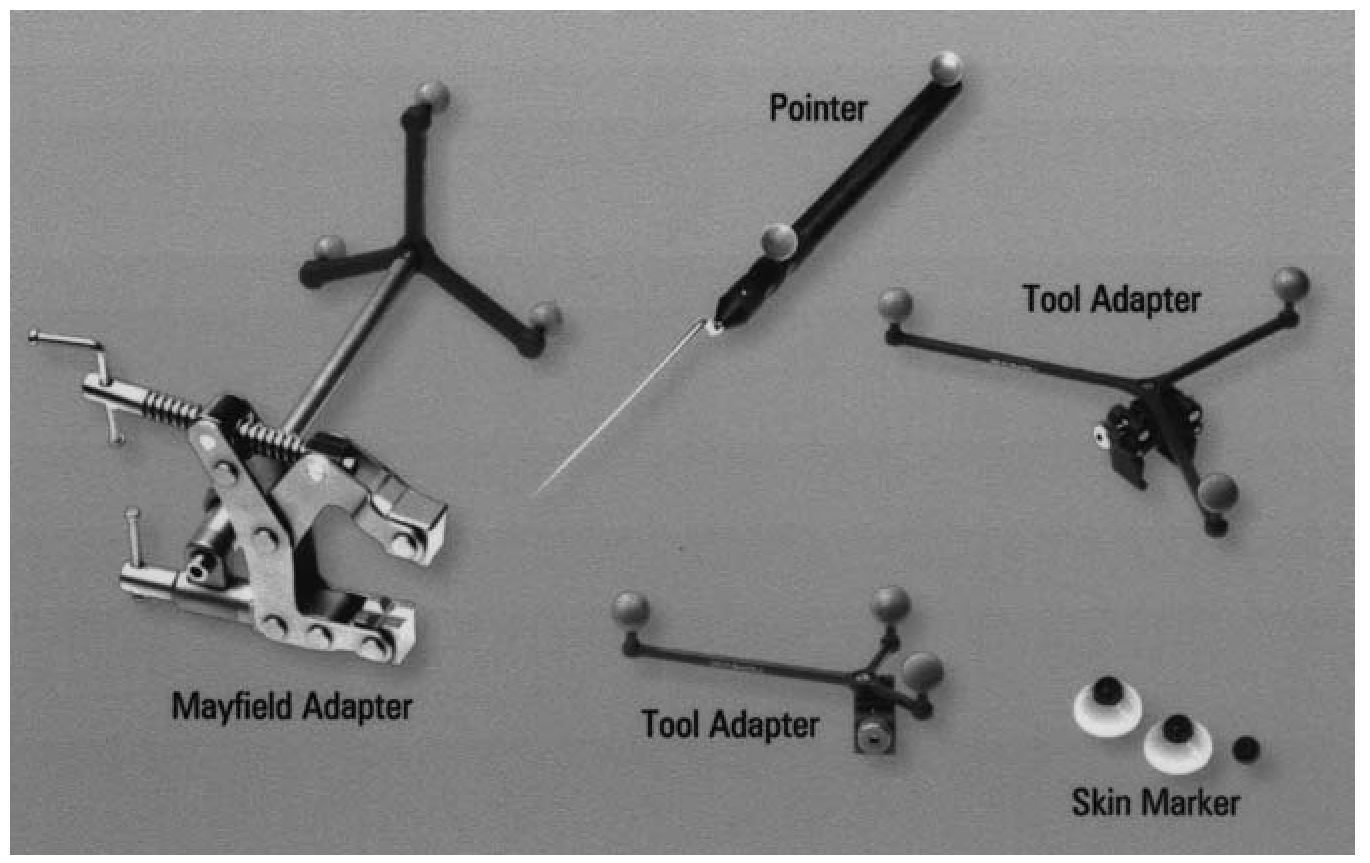

The area where I see a real and immediate use for these high-tech AR devices is the operating room. In my previous life as a medical student, I spent quite some time in the operating theatre watching surgeons frantically check whether they were cutting the right part of the brain by placing a sharp, needle-like pointer (fitted with motion capture dots) on or inside the patient's brain. The position of the pointer was picked up by three infrared cameras, and a monitor showed the position of the needle tip in real time on three 2D views (front, top and side) of the brain reconstructed from CT or MRI scans. This 3D navigation technique is called stereotactic neurosurgery and is an invaluable tool for guiding neurosurgical interventions.

Instruments for stereotactic surgery (from here)

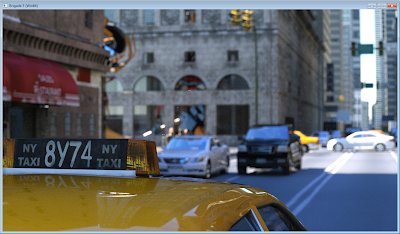

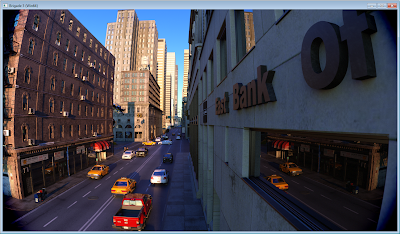

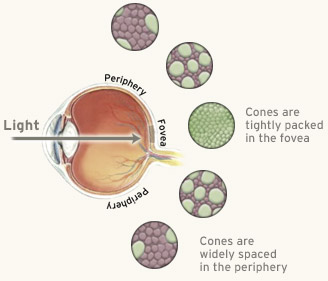

While I was amazed at the accuracy and usefulness of this high-tech procedure, I was also imagining ways to improve it. Every time the surgeon checks the position of the pointer on the monitor, he or she loses visual contact with the operating field, and "blindly" guiding instruments inside the body is not recommended. A real-time, three-dimensional augmented reality overlay that can be viewed from any angle and shows the relative position of the organs of interest (which might be partially or fully covered by other organs and tissues like skin, muscle, fat or bone) would be tremendously helpful. There are two conditions: the AR display device must interfere only minimally with the surgical intervention, and the augmented 3D images must be of such quality that they blend seamlessly with the real world. The recently announced wearable AR glasses by Microsoft, Sony and Magic Leap seem to take care of the former, but for the latter there is no readily available solution yet.

This is where I think real-time ray tracing will play a major role: ray tracing is the highest quality method to visualise medical volumetric data reconstructed from CT and MRI scans. It's actually possible to extend a volume ray caster with physically accurate lighting (soft shadows, ambient occlusion and indirect lighting) to add visual depth cues, and to have it run in real time on a machine with multiple high-end GPUs. The results are frighteningly realistic. I for one can't wait to test it with one of these magical glasses.
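To give a rough idea of what the core of a volume ray caster does, here is a minimal sketch in Python. It marches a single ray through a density field and composites front to back with a simple emission-absorption model. The `density` callback is a hypothetical stand-in for a lookup into a CT or MRI voxel grid, the names and parameters are my own assumptions, and the lighting extensions mentioned above (soft shadows, ambient occlusion, indirect lighting) are deliberately left out.

```python
import math

def march_ray(density, origin, direction, t_near, t_far, step=0.01, emission=1.0):
    """Front-to-back emission-absorption ray marching along one ray.

    density(x, y, z) returns an extinction coefficient (hypothetical stand-in
    for a voxel grid lookup). Returns (radiance, transmittance).
    """
    radiance = 0.0
    transmittance = 1.0  # fraction of light that still reaches the eye
    n_steps = int(round((t_far - t_near) / step))
    for i in range(n_steps):
        t = t_near + (i + 0.5) * step  # sample at the midpoint of each segment
        p = [o + t * d for o, d in zip(origin, direction)]
        sigma = density(*p)
        alpha = 1.0 - math.exp(-sigma * step)  # opacity of this segment
        radiance += transmittance * alpha * emission
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:  # early ray termination: nothing behind is visible
            break
    return radiance, transmittance

def sphere(x, y, z):
    # Hypothetical test volume: uniform density 2.0 inside a unit sphere.
    return 2.0 if x * x + y * y + z * z < 1.0 else 0.0

# One ray along the z axis, straight through the centre of the sphere.
radiance, transmittance = march_ray(sphere, (0.0, 0.0, 0.0), (0.0, 0.0, 1.0), -2.0, 2.0)
print(radiance, transmittance)
```

The ray crosses 2 units of material with density 2, so the transmittance should come out close to exp(-4), which is about 0.018. A real renderer would run one such loop per pixel on the GPU and look up densities in a 3D texture instead of calling a Python function.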

As an update to my previous post, the people behind Scratch-a-Pixel have launched a v2.0 website, featuring improved and better organised content (still a work in progress, but the old website can still be accessed). It's by far the best resource for learning ray tracing programming, for both novices (non-engineers) and experts. Once you've conquered all the content on Scratch-a-Pixel, I recommend taking a look at the following ray tracing tutorials that come with source code:

- smallpt from Kevin Beason: an impressively tiny path tracer in 99 lines of C++ code. Make sure to read David Cline's slides, which explain the background details of this marvel.

- Rayito, by Mike Farnsworth from Renderspud (currently at Solid Angle working on Arnold Render): a very neatly coded ray/path tracer in C++, featuring path tracing, stratified sampling, lens aperture (depth of field), a simple BVH (with median split), Qt GUI, triangle meshes with obj parser, diffuse/glossy materials, motion blur and a transformation system. Not superfast, because it favours code clarity, but a great way to get familiar with the architecture of a ray tracer

- Renderer 2.x: a CUDA and C++ ray tracer, featuring a SAH BVH (built with the surface area heuristic for better performance), triangle meshes, a simple GUI and ambient occlusion

- Peter and Karl's GPU path tracer: a simple but very fast open source GPU path tracer that supports sphere primitives, ray traced depth of field and subsurface scattering (SSS)
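To illustrate the difference between the median split and the surface area heuristic (SAH) mentioned in the list above, here is a small sketch in Python. It is not taken from any of these renderers: the function names and the cost constants `c_trav` and `c_isect` are assumptions for illustration, and it only evaluates candidate splits along one axis instead of building a full BVH.

```python
def surface_area(lo, hi):
    """Surface area of an axis-aligned box given its min and max corners."""
    dx, dy, dz = (h - l for l, h in zip(lo, hi))
    return 2.0 * (dx * dy + dy * dz + dz * dx)

def bounds(boxes):
    """Smallest box enclosing a list of (lo, hi) boxes."""
    lo = tuple(min(b[0][k] for b in boxes) for k in range(3))
    hi = tuple(max(b[1][k] for b in boxes) for k in range(3))
    return lo, hi

def sah_cost(left, right, c_trav=1.0, c_isect=1.0):
    """Estimated cost of splitting a node into `left` and `right`.

    Each child is weighted by the chance that a ray hitting the parent also
    hits the child, approximated by the ratio of their surface areas.
    """
    parent_area = surface_area(*bounds(left + right))
    cost = c_trav
    for side in (left, right):
        cost += surface_area(*bounds(side)) / parent_area * len(side) * c_isect
    return cost

def best_split(boxes, axis=0):
    """Try every split position along one axis; return (cost, index)."""
    order = sorted(boxes, key=lambda b: b[0][axis] + b[1][axis])  # sort by centroid
    return min((sah_cost(order[:i], order[i:]), i) for i in range(1, len(order)))

# Seven unit cubes in a row plus one far-away outlier (made-up test data).
boxes = [((k, 0, 0), (k + 1, 1, 1)) for k in range(7)] + [((100, 0, 0), (101, 1, 1))]
cost, index = best_split(boxes)
median_cost = sah_cost(boxes[:4], boxes[4:])
print(index, cost, median_cost)
```

In this example the SAH puts the outlier in a node of its own (split index 7), while a median split at index 4 produces a right child that spans mostly empty space and therefore gets a much higher estimated cost. Production builders typically evaluate all three axes and use binning rather than testing every position.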

If you're still not satisfied after that and want a deeper understanding, consider the following books:

- "Realistic Ray Tracing" by Peter Shirley,

- "Ray Tracing from the Ground Up" by Kevin Suffern,

- "Principles of Digital Image Synthesis" by Andrew Glassner, a fantastic and huge resource, freely available here, which also covers signal processing techniques like Fourier transforms and wavelets (if your calculus is a bit rusty, check out Khan Academy, a great open online platform for engineering level mathematics)

- "Advanced Global Illumination" by Philip Dutré, Kavita Bala and Philippe Bekaert, another superb resource, covering finite element radiosity and Monte Carlo rendering techniques (path tracing, bidirectional path tracing, Metropolis light transport, importance sampling, ...)
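As a small taste of the Monte Carlo techniques covered in that last book, here is a sketch in Python of what importance sampling buys you. Both functions estimate the integral of cos(theta) over the hemisphere, whose exact value is pi. The function names are my own, and the example is a toy: a real path tracer would be integrating incoming light times the BRDF, not just the cosine term.

```python
import math
import random

def estimate_uniform(n, rng):
    """Directions drawn uniformly by solid angle, pdf = 1 / (2 * pi)."""
    total = 0.0
    for _ in range(n):
        cos_theta = rng.random()  # for uniform hemisphere sampling, cos(theta) ~ U[0, 1)
        total += cos_theta / (1.0 / (2.0 * math.pi))
    return total / n

def estimate_cosine(n, rng):
    """Cosine-weighted directions, pdf = cos(theta) / pi."""
    total = 0.0
    for _ in range(n):
        cos_theta = math.sqrt(1.0 - rng.random())  # in (0, 1], so no division by zero
        total += cos_theta / (cos_theta / math.pi)
    return total / n

rng = random.Random(1)
print(estimate_uniform(10000, rng), estimate_cosine(10000, rng), math.pi)
```

Because the cosine-weighted pdf is exactly proportional to the integrand, every sample contributes pi and the estimator has zero variance, while the uniform estimator only converges to pi as the sample count grows. In practice the pdf rarely matches the integrand perfectly, but the closer it gets, the less noise ends up in the image.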