This week Google announced "Seurat", a novel surface lightfield rendering technology which would enable "real-time cinema-quality, photorealistic graphics" on mobile VR devices, developed in collaboration with ILMxLab:

The technology captures all light rays in a scene by pre-rendering it from many different viewpoints. At runtime, entirely new viewpoints are created by interpolating between those pre-rendered viewpoints on the fly, resulting in photoreal reflections and lighting in real-time (http://www.roadtovr.com/googles-seurat-surface-light-field-tech-graphical-breakthrough-mobile-vr/).

At almost the same time, Disney released a paper called "Real-time rendering with compressed animated light fields", demonstrating the feasibility of rendering a Pixar-quality 3D movie in real-time where the viewer can actually be part of the scene and walk in between scene elements or characters (according to a predetermined camera path).

Light field rendering in itself is not a new technique and has actually been around for more than 20 years, but has only recently become a viable rendering technique. The first paper was presented at SIGGRAPH 1996 ("Light field rendering" by Marc Levoy and Pat Hanrahan) and the method has since been incrementally improved by others. Stanford University compiled an entire archive of light fields to accompany the 1996 SIGGRAPH paper, which can be found at http://graphics.stanford.edu/software/lightpack/lifs.html. A more up-to-date archive of photography-based light fields can be found at http://lightfield.stanford.edu/lfs.html

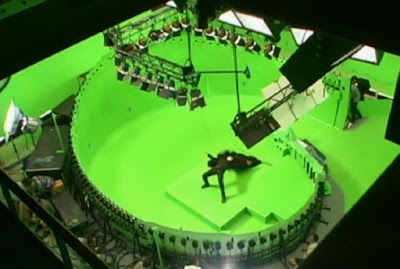

One of the first movies that showed a practical use for light fields is The Matrix from 1999, where an array of cameras firing at the same time (or in rapid succession) made it possible to pan around an actor to create a super slow motion effect ("bullet time"):

Figure: Bullet time in The Matrix (1999)

Rendering the light field

Instead of attempting to explain the theory behind light fields (for which there are plenty of excellent online sources), the main focus of this post is to show how to quickly get started with rendering a synthetic light field using Blender Cycles and some open-source plug-ins. If you're interested in a crash course on light fields, check out Joan Charmant's video tutorial below, which explains the basics of implementing a light field renderer:

The following video demonstrates light fields rendered with Cycles:

Rendering a light field with Blender's Cycles is surprisingly easy and doesn't require much technical expertise (besides knowing how to build the plug-ins). For this tutorial, we'll use a couple of open-source plug-ins:


1) The first one is the light field camera grid add-on for Blender made by Katrin Honauer and Ole Johannsen from Heidelberg University in Germany:

This plug-in sets up a camera grid in Blender and renders the scene from each camera using the Cycles path tracing engine. Good results can be obtained with a grid of 17 by 17 cameras with a distance of 10 cm between neighbouring cameras. For high quality, a 33-by-33 camera grid with an inter-camera distance of 5 cm is recommended.
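To make the grid layout concrete, here is a minimal sketch of how the camera positions for such a grid could be computed. It is not the add-on's own code: the function name `camera_grid` and its parameters are hypothetical, and it assumes a flat, evenly spaced grid centred on the origin with all cameras facing the same direction.

```python
def camera_grid(n=17, spacing=0.10):
    """Return (x, y) offsets in metres for an n-by-n camera grid.

    Hypothetical helper for illustration only (not part of the add-on).
    The grid is centred on the origin; `spacing` is the distance
    between neighbouring cameras.
    """
    half = (n - 1) / 2.0
    return [((col - half) * spacing, (row - half) * spacing)
            for row in range(n)
            for col in range(n)]


grid = camera_grid(17, 0.10)   # the "good results" setting from the text
print(len(grid))               # number of renders needed: 289
print((17 - 1) * 0.10)         # total width of the grid in metres

hq = camera_grid(33, 0.05)     # the high-quality setting from the text
print(len(hq))                 # 1089
```

Note that both settings cover the same 1.6 m wide area; the 33-by-33 grid simply samples it twice as densely in each direction, at the cost of roughly four times as many renders.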

Figure: 3-by-3 camera grid with overlapping frustums

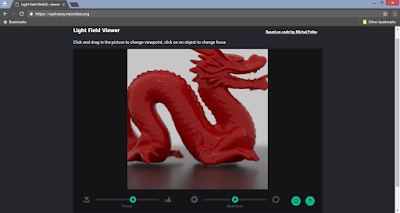

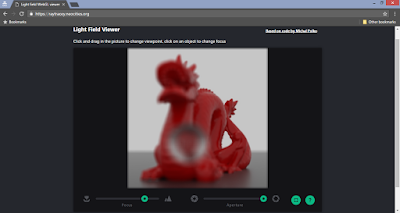

2) The second tool is the light field encoder and WebGL-based light field viewer, created by Michal Polko, found at https://github.com/mpk/lightfield (build instructions are included in the readme file).

This tool takes in all the images generated by the first plug-in and compresses them by keeping some keyframes and encoding only the delta for the remaining intermediate frames. The viewer is WebGL-based and makes use of virtual texturing (similar to Carmack's MegaTexture) for fast, on-the-fly reconstruction of new viewpoints from pre-rendered viewpoints (via hardware-accelerated bilinear interpolation on the GPU).
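The interpolation step can be illustrated on the CPU with a few lines of Python. This is only a sketch of plain bilinear blending between the four grid views that surround a fractional camera position. It is not the viewer's actual shader code, and it leaves out virtual texturing, keyframe/delta decoding and any focus-dependent reprojection. The function name `blend_views` and the flat-list image layout are assumptions made for the example.

```python
def blend_views(views, u, v):
    """Bilinearly blend the four views around grid position (u, v).

    Illustrative only. views[row][col] is an image stored as a flat
    list of floats; u (column) and v (row) are in grid units, so
    u = 2.5 lies halfway between camera columns 2 and 3.
    The grid must be at least 2 by 2.
    """
    rows, cols = len(views), len(views[0])
    # Stay within the limits of the original camera grid.
    u = min(max(u, 0.0), cols - 1)
    v = min(max(v, 0.0), rows - 1)
    # Top-left camera of the surrounding 2x2 cell.
    c0 = min(int(u), cols - 2)
    r0 = min(int(v), rows - 2)
    fu, fv = u - c0, v - r0
    a, b = views[r0][c0], views[r0][c0 + 1]
    c, d = views[r0 + 1][c0], views[r0 + 1][c0 + 1]
    return [(1 - fu) * (1 - fv) * pa + fu * (1 - fv) * pb
            + (1 - fu) * fv * pc + fu * fv * pd
            for pa, pb, pc, pd in zip(a, b, c, d)]


# Four one-pixel "images" on a 2x2 camera grid.
views = [[[0.0], [1.0]],
         [[2.0], [3.0]]]
print(blend_views(views, 0.5, 0.5))  # [1.5], the average of all four
print(blend_views(views, 1.0, 0.0))  # [1.0], exactly the top-right view
```

On the GPU the same weighting comes essentially for free from bilinear texture filtering, which is why reconstructing a new viewpoint is cheap enough to do every frame.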


Results and Live Demo

A live online demo of the light field with the dragon can be seen here:

You can change the viewpoint (within the limits of the original camera grid) and refocus the image in real-time by clicking on the image.

I rendered the Stanford dragon using a 17-by-17 camera grid with a distance of 5 cm between adjacent cameras. The light field was created by rendering the scene from 289 (17x17) different camera viewpoints, which took about 6 minutes in total (about 1 to 2 seconds of render time per 512x512 image on a good GPU). The 289 renders are then highly compressed (for this scene, the 107 MB batch of 289 images was compressed down to only 3 MB!).
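As a quick sanity check on those numbers, the following sketch recomputes the view count, the render-time range and the compression ratio from the figures quoted above. The helper name `light_field_budget` is made up for this example.

```python
def light_field_budget(grid_side, sec_per_view, images_mb, packed_mb):
    """Back-of-the-envelope figures for a square camera grid.

    Hypothetical helper: sec_per_view is a (min, max) tuple in seconds.
    Returns (views, min_minutes, max_minutes, compression_ratio).
    """
    views = grid_side * grid_side
    lo, hi = sec_per_view
    return views, views * lo / 60, views * hi / 60, images_mb / packed_mb


views, t_min, t_max, ratio = light_field_budget(17, (1, 2), 107, 3)
print(views)                                   # 289
print(f"{t_min:.1f} to {t_max:.1f} minutes")   # 4.8 to 9.6 minutes
print(f"{ratio:.1f}x smaller")                 # 35.7x smaller
```

The measured total of about 6 minutes sits inside that range and corresponds to an average of roughly 1.25 seconds per view.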

A depth map is also created at the same time and enables on-the-fly refocusing of the image by interpolating information from several images.
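To give an intuition for how combining several views produces a refocused image, here is a sketch of the classic "shift-and-add" refocusing approach, reduced to one dimension. This is a swapped-in textbook technique for illustration: it does not use a depth map and is not necessarily how the viewer implements refocusing. The function name `refocus` and the data layout are assumptions.

```python
def refocus(views, offsets, disparity):
    """Shift-and-add refocus over 1-D views (illustrative sketch).

    views[i] is a row of pixel values seen by a camera at offsets[i]
    (in grid units relative to the centre camera). `disparity` is the
    pixel shift per grid unit of the plane to bring into focus.
    """
    width = len(views[0])
    out = []
    for x in range(width):
        total = 0.0
        for view, off in zip(views, offsets):
            # Shift each view in proportion to its camera offset.
            src = x + round(off * disparity)
            src = min(max(src, 0), width - 1)  # clamp at the border
            total += view[src]
        out.append(total / len(views))
    return out


# A bright point that moves by one pixel per camera step.
views = [
    [0, 0, 0, 1, 0, 0, 0, 0],   # camera at offset -1
    [0, 0, 0, 0, 1, 0, 0, 0],   # centre camera
    [0, 0, 0, 0, 0, 1, 0, 0],   # camera at offset +1
]
offsets = [-1, 0, 1]
sharp = refocus(views, offsets, disparity=1)    # point lines up: in focus
blurred = refocus(views, offsets, disparity=0)  # point is smeared over 3 pixels
print(sharp[4], blurred[4])
```

When the chosen disparity matches that of the point, all views agree and the point stays sharp; with any other disparity its energy is spread across neighbouring pixels, which is what appears as defocus blur.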

A later tutorial will add a bit more freedom to the camera, allowing for rotation and zooming.